Protecting Valuable Data Assets: Lessons Learned from Microsoft’s Accidental Data Exposure

In the ever-evolving landscape of cloud technology, data security remains paramount. Recent events have shed light on the critical importance of safeguarding sensitive information, and today, we delve into a notable incident involving Microsoft’s AI research and the accidental exposure of 38 terabytes of private data. This alarming breach serves as a stark reminder of the challenges organizations face when dealing with massive amounts of training data in the cloud.

Microsoft’s Unintentional Data Disclosure

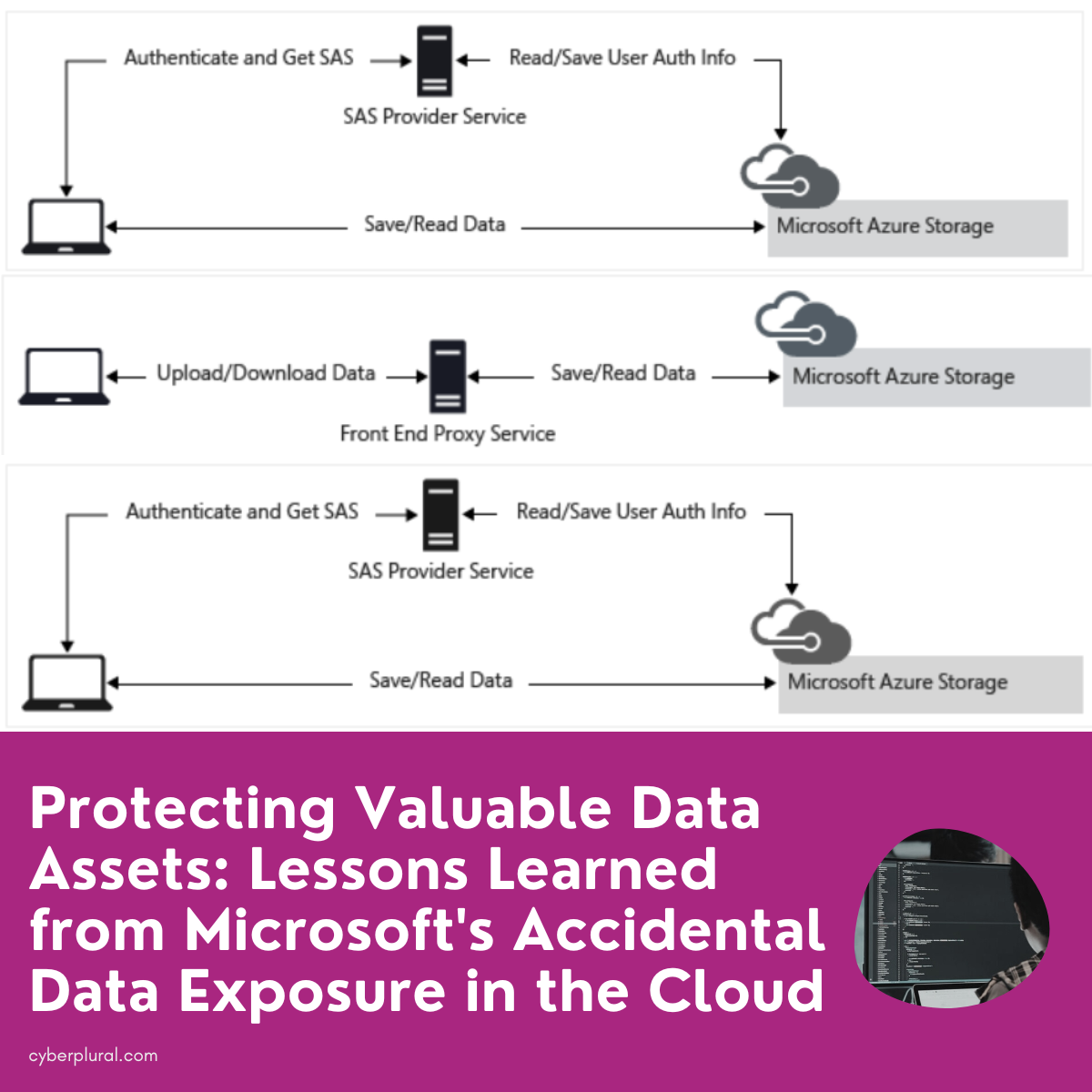

Microsoft’s AI research division, known for its contributions to open-source code and AI models, inadvertently exposed a vast trove of confidential data. The incident occurred when the team shared files via Azure Storage using Shared Access Signature (SAS) tokens, a powerful feature that carries real risk if not properly configured. The exposed data included backups of two former employees’ workstations containing secret keys, passwords, and over 30,000 internal Microsoft Teams messages.

This disclosure was made possible by an overly permissive SAS token. SAS is an Azure feature that lets users share data in a way that is both hard to track and hard to revoke. The repository’s README.md instructed developers to download the model from an Azure Storage URL, but the token embedded in that URL granted full access (read, write, and delete) to the entire storage account, exposing additional private data that was never meant to be public.

What Are Shared Access Signature (SAS) Tokens?

To comprehend the full scope of this breach, it’s essential to grasp the intricacies of SAS tokens. In Azure, a shared access signature token (SAS) is a signed URL that grants access to specific Azure Storage data. Its access level, permissions, and scope can be precisely configured, but this flexibility comes with potential pitfalls if not handled with caution.

Three Types of SAS Tokens

Azure offers three types of SAS tokens:

- Account SAS: Grants access to an entire storage account or to specific resources within it, allowing actions such as reading, writing, and deleting data.

- Service SAS: Grants access to specific services or resources within a storage account, with customizable permissions and time limits. It is a precise way to share only the necessary data or services without exposing the entire account.

- User Delegation SAS: Delegates access to storage resources on behalf of a user through Azure Active Directory (now Microsoft Entra ID), offering tighter identity control and auditability. It is often used for temporary access and carries less risk than the other SAS types.

This post focuses on Account SAS tokens, the type implicated in the incident.

The Creation Process

Generating an Account SAS token involves configuring its scope, permissions, and expiry date. Behind the scenes, the token is signed with the account key, and the signing happens on the client side. Because the resulting token is not tracked by Azure as a managed object, there is no centralized oversight, which makes it hard to track token creation and monitor token use.

The Revocation Challenge

Revoking an Account SAS token is a complex task, as it necessitates rotating the entire account key, rendering all other tokens associated with that key ineffective. This creates a delicate balance between security and convenience, particularly when organizations deal with numerous tokens.
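Both points, local signing and revocation by key rotation, can be illustrated with a small sketch. It uses only Python's standard library and a deliberately simplified string-to-sign, so it is not Azure's actual SAS format; the function names, the canonical form, and the placeholder keys are assumptions made for illustration.

```python
import base64
import hashlib
import hmac


def compute_signature(account_key_b64: str, params: dict) -> str:
    """Sign the token fields locally with HMAC-SHA256 (simplified, not Azure's real format)."""
    key = base64.b64decode(account_key_b64)
    # Hypothetical canonical form: "name=value" lines in sorted key order.
    string_to_sign = "\n".join(f"{name}={params[name]}" for name in sorted(params))
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def is_valid(account_key_b64: str, params: dict, signature: str) -> bool:
    """Recompute the signature with the current key and compare in constant time."""
    return hmac.compare_digest(compute_signature(account_key_b64, params), signature)


# Placeholder keys for illustration only.
old_key = base64.b64encode(b"original-account-key").decode("ascii")
new_key = base64.b64encode(b"rotated-account-key").decode("ascii")

token_fields = {"sp": "rwdl", "se": "2050-01-01T00:00:00Z"}
signature = compute_signature(old_key, token_fields)  # no network call involved

print(is_valid(old_key, token_fields, signature))  # True: token works while the key is unchanged
print(is_valid(new_key, token_fields, signature))  # False: rotating the key invalidates it
```

Because nothing in this flow contacts a server, the service has no record that a token was ever created. The only lever left is the key itself, and rotating it invalidates every token signed with it at once.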

The Scale of the Exposure

An investigation of the incident revealed that the exposed token granted access to the entire storage account rather than to the intended files alone. This misconfiguration exposed an additional 38 terabytes of data, including personal computer backups of Microsoft employees. The exposed data included passwords, secret keys, and more than 30,000 internal Microsoft Teams messages from 359 employees. Beyond the excessively broad access scope, the token was also misconfigured to grant “full control” permissions rather than read-only access. An unauthorized individual could therefore not only view every file in the storage account but also delete and overwrite existing files.

Unintended Consequences

The gravity of the situation increases when we consider that this repository was primarily aimed at providing AI models for training code. Users were instructed to download model data from the SAS link, and here lies a critical vulnerability: the files were in ckpt format, which is serialized with Python’s pickle and is therefore inherently susceptible to arbitrary code execution. Because the token also allowed writes, an attacker could have injected malicious code into the AI models, potentially infecting countless users who trusted Microsoft’s GitHub repository.
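The pickle risk is easy to demonstrate with a harmless example. In the sketch below, the `TamperedModel` class is a hypothetical stand-in for a modified model file; it uses `eval` on a fixed arithmetic expression only to show that loading a pickle runs code chosen by whoever wrote the file.

```python
import pickle


class TamperedModel:
    """Hypothetical stand-in for a model file that an attacker has overwritten."""

    def __reduce__(self):
        # pickle calls the returned callable with these arguments at load time.
        # A harmless expression is evaluated here; an attacker would choose
        # something far more damaging.
        return (eval, ("6 * 7",))


blob = pickle.dumps(TamperedModel())

# The victim only "loads a model", yet the embedded call runs during loading.
loaded = pickle.loads(blob)

print(loaded)                             # 42
print(isinstance(loaded, TamperedModel))  # False
```

The loaded value is whatever the embedded call returned, not a model object, and the call has already executed by the time the caller can inspect anything. This is why pickle-based files should only be loaded from sources that could not have been tampered with.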

NOTE: This storage account was not directly exposed to the general public; it was configured as a private storage account. Microsoft’s developers used an Azure feature known as SAS tokens, which creates shareable links that grant access to Azure Storage account data. As a result, the data was reachable by anyone holding the link, even though the storage account itself still appeared entirely private when inspected.

Safeguarding Azure Storage: Best Practices

In light of the security risks posed by SAS tokens, it’s crucial to adopt proactive measures to protect your data and prevent inadvertent exposure.

Token Management

- Limit Account SAS Usage: Given the difficulty in monitoring Account SAS tokens, they should be used sparingly. Avoid relying on them for external sharing.

- Consider Service SAS with Policies: For external sharing, consider Service SAS tokens with Stored Access Policies, providing centralized control and revocation capabilities.

- User Delegation SAS: When sharing content for a limited time, opt for User Delegation SAS tokens, capped at a 7-day expiry.

- Dedicated Storage Accounts: Create dedicated storage accounts for external sharing to minimize the impact of over-privileged tokens.
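Before a SAS link is shared, its query string can be reviewed against the guidance above. The following is a minimal sketch that reads the standard SAS query parameters (`ss`, `srt`, `sp`, `se`) and reports anything that looks over-privileged. The function name, the 7-day threshold, the wording of the findings, and the example URL are assumptions for illustration, not an official Azure tool.

```python
from datetime import datetime, timedelta, timezone
from typing import List
from urllib.parse import parse_qs, urlparse

# Permission flags treated as risky for external sharing (an assumption of this sketch).
RISKY_PERMISSIONS = {"w": "write", "d": "delete"}


def audit_sas_url(url: str, now: datetime,
                  max_lifetime: timedelta = timedelta(days=7)) -> List[str]:
    """Return a list of human-readable findings for one SAS URL."""
    query = parse_qs(urlparse(url).query)
    findings = []

    # Account SAS tokens carry signed services (ss) and signed resource types (srt).
    if "ss" in query and "srt" in query:
        findings.append("account-level SAS")

    permissions = query.get("sp", [""])[0]
    risky = [label for flag, label in RISKY_PERMISSIONS.items() if flag in permissions]
    if risky:
        findings.append("grants " + " and ".join(risky))

    expiry_raw = query.get("se", [""])[0]
    if expiry_raw:
        expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)
        if expiry - now > max_lifetime:
            findings.append("expires more than %d days from now" % max_lifetime.days)

    return findings


# Hypothetical URL with a redacted signature.
example = ("https://example.blob.core.windows.net/models"
           "?sv=2021-08-06&ss=b&srt=sco&sp=rwdl&se=2050-01-01T00:00:00Z&sig=REDACTED")
for finding in audit_sas_url(example, now=datetime(2024, 1, 1, tzinfo=timezone.utc)):
    print(finding)
```

A check like this only reads what the token declares about itself. It cannot tell whether the token is still valid or who has used it, so it complements rather than replaces the monitoring steps that follow.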

Monitoring and Visibility

- Enable Storage Analytics Logs: To track token usage, enable Storage Analytics logs for each storage account. These logs provide insights into SAS token access.

- Azure Metrics: Utilize Azure Metrics to monitor SAS token usage in storage accounts, identifying potential security concerns.

- Secret Scanning: Employ secret scanning tools to detect leaked or over-privileged SAS tokens in public assets, such as GitHub repositories, to preempt security breaches.
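As a rough illustration of the secret scanning idea, the sketch below searches text such as a README for Azure Storage URLs that carry a `sig=` parameter. The regular expression and the function name are assumptions of this example and are far simpler than what a dedicated scanning tool would use.

```python
import re
from typing import List

# Matches Azure Storage endpoint URLs whose query string contains a signature.
SAS_URL_PATTERN = re.compile(
    r"https://[a-z0-9]+\.(?:blob|file|queue|table|dfs)\.core\.windows\.net/"
    r"[^\s\"'<>]*\?[^\s\"'<>]*\bsig=[^\s\"'<>&]+",
    re.IGNORECASE,
)


def find_sas_urls(text: str) -> List[str]:
    """Return every substring of text that looks like a signed Azure Storage URL."""
    return SAS_URL_PATTERN.findall(text)


# Hypothetical README content with a redacted signature.
readme = """
Download the model from
https://example.blob.core.windows.net/models/model.ckpt?sv=2021-08-06&sp=r&sig=REDACTED
Public docs: https://example.blob.core.windows.net/public/readme.txt
"""

for url in find_sas_urls(readme):
    print(url)
```

Only the first URL is reported, because the second has no signature in its query string. Running a check like this in a pre-commit hook or CI job is one way to catch a token before it reaches a public repository.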

The Wider Implications

As organizations increasingly embrace AI technologies, security teams must proactively address security risks throughout the AI development process. This incident underscores two significant risks—data oversharing and supply chain attacks. Defining clear guidelines for external data sharing and sanitizing AI models from external sources are crucial steps to mitigate these risks.

Conclusion

The accidental exposure of 38 terabytes of private data by Microsoft’s AI researchers serves as a sobering reminder of the importance of robust security measures, especially when dealing with sensitive data in the cloud. Shared Access Signature (SAS) tokens, while powerful, require diligent configuration and monitoring to prevent inadvertent data exposure. By following best practices and maintaining vigilance, organizations can protect their valuable data assets and safeguard against potential breaches.

By Yakubu Birma and Ahmed Rufai
